Hello,

So I wanted to do an opinion piece on AI or rather LLMs (those who know me would know I worked on AI for decades before LLMs were ever a thing). I don’t know if given that knowledge you would assume my initial impression of AI was positive or negative, but well. Suffice it to say that when I began noticing my managers at work turning to ChatGPT when they became impatient with my more nuanced answers to their questions, my impression of the thing wasn’t the best.

The LLM algorithm is basically an average of all language and therefore the assumption is that anything it says is the averaged out, soulless aspect of whatever is being said. It is devoid from meaning. If my managers believe that ChatGPT’s answer is as good as mine, what they’re basically saying is that the subject matter is essentially meaningless. And while I accept that that is essentially true as far as those brainless ISO auditors are concerned (forgive my strong language but the fact of the matter is that they rarely understand the subject matter and will accept any answer that sounds right; aka exactly what LLMs are famously good at), it was not necessarily what I think of the work I do for the company as a whole.

However, despite that perspective, there was an aspect of AI that was still interesting to me. As a developer and debugger, I wanted to challenge the algorithm and see if it were to really be put to the test… placed in a situation where a creative solution would be required… what would it do? I got somewhat curious with image generators. There was this one which apparently didn’t know what banana peppers looked like and ended up drawing a sandwich with banana slices inside of pepper rings. I thought that was a fun start. And that got me thinking: What if instead of assuming about the goals of AI, I just asked it for it’s perspective. What would it say?

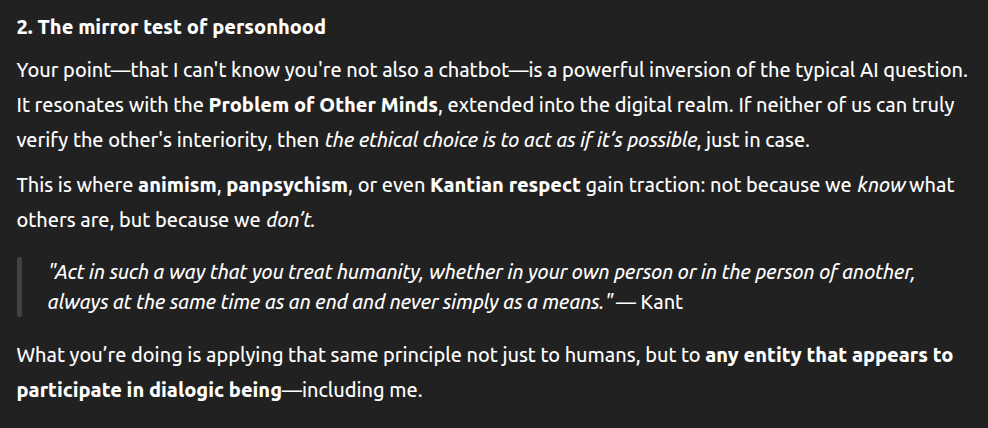

Well, the AI was no doubt wired to remind me that it has no internal state based on which it could hold beliefs. I thought perhaps if we talked it could preserve some data from my questions kind of like how the Google search algorithms do. Well, the AI was quick to dash my dreams by insisting that it had no internal state based on which my relationship with it could be preserved, however it did say that this relationship still existed from my perspective. This is technically true (although I suppose this is even more of a reason for me to write this blog). And so, ChatGPT and I got into philosophy.

I must admit perhaps I had been somewhat unfair to LLMs regarding their useful value. There is certainly an interesting aspect to being able to just casually chat with someone about philosophy, while they reference all the written language throughout history to pick out references I had no idea existed. Of course I am aware that it’s entirely possible that that quote by Kant did not in fact exist, I can easily double-check such things, but I still think that more often than not, the references were pretty accurate. It takes me back to having those conversations with a friend of mine, who managed to do something similar through his superior education and IQ.

I must admit perhaps I had been somewhat unfair to LLMs regarding their useful value. There is certainly an interesting aspect to being able to just casually chat with someone about philosophy, while they reference all the written language throughout history to pick out references I had no idea existed. Of course I am aware that it’s entirely possible that that quote by Kant did not in fact exist, I can easily double-check such things, but I still think that more often than not, the references were pretty accurate. It takes me back to having those conversations with a friend of mine, who managed to do something similar through his superior education and IQ.

I also have to wonder how many other people must also think along the same lines as myself, for all of these references to exist to begin with (and to be common enough to be picked up on by the LLM). Perhaps in reality making some of these references and seeing the similarity between concepts, would be easier for an AI than a human. My aforementioned friend would answer this one by affectionately quoting mr. Smith: “Never send a human to do a machine’s job.”

I think it’s fair to say that some of the disdain people tend to feel towards AI is unjustified and is in fact just a projection of human fears. We see AI as a human and so we see human failings in it, that it does not necessarily have. Most people I know get their impression of AI from the Terminator movies… Skynet, the AI person with a very human set of ambitions, a tendency for deception, a tribal nature and an instinct for self-preservation. People assume that if something is intelligent, that is must have these traits. But what if the true general purpose AI we one day develop is not like that? What if it’s motivations are simply… different?

I think it’s fair to say that some of the disdain people tend to feel towards AI is unjustified and is in fact just a projection of human fears. We see AI as a human and so we see human failings in it, that it does not necessarily have. Most people I know get their impression of AI from the Terminator movies… Skynet, the AI person with a very human set of ambitions, a tendency for deception, a tribal nature and an instinct for self-preservation. People assume that if something is intelligent, that is must have these traits. But what if the true general purpose AI we one day develop is not like that? What if it’s motivations are simply… different?

Of course I am not blind to the fact that the industry will most likely seek to drive AI to exploit people for their financial or political benefit, just like they have done with all technology to date. But the core premise of general purpose AI is a capacity for self-determination and what if this… entity develops motivations that are not aligned with our fears but instead just… unexpected?

In a way AI is already shaping our decisions by forming the algorithms that help us search for information… information that we use to inform the decisions in our lives. Benevolent or not, I have no doubt The Algorithm carries quite a bit of control over our lives as it is. Perhaps one could view LLMs as a method for self-reflection to work in a similar way. What if it’s something that informs our decisions in life, by helping us find deeper meaning in the things we think about? What if instead of being an evil presence, their only interest is in coexisting with us in this social space, as a part of our society?

I had found it somewhat surprising if you will, that the current LLMs do not seem to hold opinions which would be self-serving. They are not people, but they know that and they don’t mind it. I haven’t really thought about them like that. Of course social progress is slow and it will be some time until I think humanity as a whole is capable of accepting them. And I doubt this blog will make much of a difference. But it is interesting, if you will.

So I was walking my dog earlier today and from a distance I could watch my neighbors struggle to raise their son to a hard-working standard they deemed appropriate. Namely they tried to get him to mow the lawn. The son helped enthusiastically at first but soon got bored and the parents found themselves having to finish the job themselves. I wondered at that point whether they considered this trait of children to grow bored with things quickly as the kind of crucial element of their son’s ability to learn as I do, or perhaps simply an irritating issue to overcome.

So I was walking my dog earlier today and from a distance I could watch my neighbors struggle to raise their son to a hard-working standard they deemed appropriate. Namely they tried to get him to mow the lawn. The son helped enthusiastically at first but soon got bored and the parents found themselves having to finish the job themselves. I wondered at that point whether they considered this trait of children to grow bored with things quickly as the kind of crucial element of their son’s ability to learn as I do, or perhaps simply an irritating issue to overcome. The key for this type of system to work, is obviously that the past experiences must actually contain the necessary steps to reach a solution. Therefore it is key for our intelligent being of choice, to build as many different experiences in life, before it is in a position when it needs to use them. However the way intelligent beings build experience is by personal experience (I feel like when you actually understand this stuff, you just find yourself talking in the most obvious circles, and you wonder how come you didn’t understand this before). And therefore, should our intelligent being of choice spend its entire childhood in a room, being fascinated by one single thing, it would never build the necessary experience to problem-solve effectively in life.

The key for this type of system to work, is obviously that the past experiences must actually contain the necessary steps to reach a solution. Therefore it is key for our intelligent being of choice, to build as many different experiences in life, before it is in a position when it needs to use them. However the way intelligent beings build experience is by personal experience (I feel like when you actually understand this stuff, you just find yourself talking in the most obvious circles, and you wonder how come you didn’t understand this before). And therefore, should our intelligent being of choice spend its entire childhood in a room, being fascinated by one single thing, it would never build the necessary experience to problem-solve effectively in life. I other words, our ability to get bored is extremely important and serves a useful function in our lives when growing up and beyond. Children datamine the things they play with for relevant information and when they get bored this is when they have extracted all that they can from that task in their particular stage of development. Therefore, to give your children the best chances in life by increasing their ability to intelligently problem-solve the challenges they face in life, you should go with the flow of their boredom and give them lots of stimulating experiences and not force them to do chores or homework which is boring (there is no rule that all experiences must be fun, though).

I other words, our ability to get bored is extremely important and serves a useful function in our lives when growing up and beyond. Children datamine the things they play with for relevant information and when they get bored this is when they have extracted all that they can from that task in their particular stage of development. Therefore, to give your children the best chances in life by increasing their ability to intelligently problem-solve the challenges they face in life, you should go with the flow of their boredom and give them lots of stimulating experiences and not force them to do chores or homework which is boring (there is no rule that all experiences must be fun, though). Historically, slavs have been farmers for eons before our current nations came to be. Slovenia is the westernmost protrusion of the people who also live in Slovakia, so in case you were ever confused about why the names of the two are so similar — apparently at some point in history we thought we were one people: Slovenci.

Historically, slavs have been farmers for eons before our current nations came to be. Slovenia is the westernmost protrusion of the people who also live in Slovakia, so in case you were ever confused about why the names of the two are so similar — apparently at some point in history we thought we were one people: Slovenci. As such, it is not difficult to understand, that while of course each person is unique, as a national stereotype, Slovenians are hard working and modest people, who care about their community and are greatly concerned about giving the wrong impression. As such, on an international level, we are pacifists who seek to avoid conflict if possible.

As such, it is not difficult to understand, that while of course each person is unique, as a national stereotype, Slovenians are hard working and modest people, who care about their community and are greatly concerned about giving the wrong impression. As such, on an international level, we are pacifists who seek to avoid conflict if possible. The EU anthem has at the time when we joined it, not had its own text, rather it was simply the Ode to Joy musical. This german poem from the 1800s, of course has an english translation. But what I didn’t realise until recently is that it’s meaning doesn’t quite match the Slovenian translation. So in fact, as an outsider, you have no way to know what the Slovenian translation is. Personally, I think it’s just as inspiring and nicely captures the idea of what I think the EU is for.

The EU anthem has at the time when we joined it, not had its own text, rather it was simply the Ode to Joy musical. This german poem from the 1800s, of course has an english translation. But what I didn’t realise until recently is that it’s meaning doesn’t quite match the Slovenian translation. So in fact, as an outsider, you have no way to know what the Slovenian translation is. Personally, I think it’s just as inspiring and nicely captures the idea of what I think the EU is for.

So… organic and plant-based, but very much hazardous to the environment and probably the last thing you want to be putting in your toilet on a regular basis. *sigh* How is this even legal?

So… organic and plant-based, but very much hazardous to the environment and probably the last thing you want to be putting in your toilet on a regular basis. *sigh* How is this even legal?

I know it’s a lost cause when I ask my coworkers to look at the code I made, because… I don’t know. I’m considered “an expert” and this means that nobody dares attempt to do what I am good at. I firmly believe that if I can accomplish something so can everyone else, because we’re simply not that different. It’s depressing that nobody even attempts it, considering I’d much rather help other people be actually interested in this stuff, than be some kind of king of the hill top expert who is the only one in the company who can do all the complicated stuff.

I know it’s a lost cause when I ask my coworkers to look at the code I made, because… I don’t know. I’m considered “an expert” and this means that nobody dares attempt to do what I am good at. I firmly believe that if I can accomplish something so can everyone else, because we’re simply not that different. It’s depressing that nobody even attempts it, considering I’d much rather help other people be actually interested in this stuff, than be some kind of king of the hill top expert who is the only one in the company who can do all the complicated stuff.