Hello,

I’m going to be upfront with you: I’m a dog owner, not a veterinarian or anything. I know a thing or two about how biology works and I love my doggo to death. I also do something people don’t usually do with dogs: I respect my doggo as a person.

Of course, every time my doggo wants a share of whatever I am having, I look up online whether it is safe for dogs. And more often than not, I find one of those auto-generated websites claiming that every single thing in the universe is bad for dogs. On the one hand (paw?), I get it: a lot of things are bad for dogs, including a few unexpected ones. But this general holy war on giving dogs any kind of human food is just straight up wrong.

Take onions, for example. A quick Google search will give you something like:

> Onions contain a toxic principle known as N-propyl disulfide. This compound causes a breakdown of red blood cells, leading to anemia in dogs.

Ooookay… that sounds plenty creepy. So all those sauces with onions in them are off-limits to dogs, better safe than sorry, right? Wrong.

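N-propyl disulfide is one of the sulfur compounds behind the sharp smell and taste of onions. It is not the thing that makes you tear up when cutting them (that is a different compound, syn-propanethial-S-oxide), and cooking does not make onions safe: cooked and powdered onion is toxic to dogs too. What actually matters is the dose, and a sauce usually contains only a small fraction of an onion per serving. So let’s take a look at the toxic doses: how much onion does a dog actually have to eat for it to be toxic? Well, my 30 kg canine friend would have to eat two whole raw onions for it to cause negative consequences.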

I think if I ate two whole raw onions, I’d probably be on my way to a hospital too. I know dogs will eat anything, but whole raw onions? Really? Well, keep your dogs out of your raw onions then! Many of the articles you can find on the subject are plain wrong because their authors don’t understand what they are writing about. They mix up things like the weight of the raw food and the weight of the toxic compound in it. A simple Google search on their own claims can often bring you significantly closer to the truth.

And yeah, things may be different for your 2 kg chihuahua. I’m talking about dogs here.
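To make the per-kilogram point concrete, here is a minimal sketch that assumes the toxic amount scales linearly with body weight and uses the "two whole onions for a 30 kg dog" figure above as its only reference point. The constant and function names are made up for illustration, and this is arithmetic, not veterinary dosing advice.

```python
# Linear per-kilogram scaling of a toxic amount. The reference point is the
# figure from the text (about two whole onions for a 30 kg dog); the names
# here are hypothetical and this is an illustration, not dosing advice.
REFERENCE_WEIGHT_KG = 30.0
REFERENCE_ONIONS = 2.0

def onions_for_weight(weight_kg: float) -> float:
    """Scale the reference amount linearly with body weight."""
    per_kg = REFERENCE_ONIONS / REFERENCE_WEIGHT_KG
    return per_kg * weight_kg

for weight in (30.0, 10.0, 2.0):
    print(f"{weight:.0f} kg dog: about {onions_for_weight(weight):.2f} onions")
```

Under the same linear assumption, the 2 kg chihuahua gets there with well under a fifth of an onion, which is exactly why the small-dog caveat matters.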

Let’s take another example: the famous chocolate. People are super-duper resistant to giving dogs any chocolate whatsoever. Toxic, right? Well, actually, chocolate is only 3 times as toxic for dogs as it is for humans. Made you choke on your Nutella, right?

The main reason the toxicity of chocolate is so exaggerated is that dogs, on average, weigh less than we do, and toxic doses are usually given per kilogram of body weight. So what might not be a problem for a 70 kg human might be a big problem for a 10 kg pup.

But humans are not all 70 kg either. Have you ever considered that your Xmas gift of chocolate might poison your little cousin? A typical 5-year-old weighs under 20 kg, so a toxic dose for them is about 0.52 g of theobromine, which is about 40 grams (half a bar) of dark chocolate or 300 grams (two bars) of milk chocolate. That amount is toxic. Ever wondered about that?
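The chocolate numbers above are just "dose per kilogram times body weight, divided by theobromine per gram of chocolate". A minimal sketch of that arithmetic follows; note that the per-kg dose and the per-gram theobromine contents are back-calculated from the figures in this post (0.52 g for a 20 kg child, 40 g of dark or 300 g of milk chocolate), so they are assumptions for illustration, not measured values.

```python
# Back-of-the-envelope theobromine arithmetic. Both constants are
# back-calculated from the figures in the text and are assumptions,
# not measured values for any particular chocolate.
TOXIC_MG_PER_KG = 520.0 / 20.0      # 26 mg of theobromine per kg body weight
THEOBROMINE_MG_PER_G = {
    "dark": 520.0 / 40.0,           # 13 mg per gram of chocolate
    "milk": 520.0 / 300.0,          # roughly 1.7 mg per gram of chocolate
}

def toxic_chocolate_grams(weight_kg: float, kind: str) -> float:
    """Grams of chocolate that deliver the toxic theobromine dose."""
    dose_mg = TOXIC_MG_PER_KG * weight_kg
    return dose_mg / THEOBROMINE_MG_PER_G[kind]

for weight in (20.0, 70.0):
    for kind in ("dark", "milk"):
        grams = toxic_chocolate_grams(weight, kind)
        print(f"{weight:.0f} kg, {kind}: about {grams:.0f} g")
```

Because the result is linear in body weight, a 70 kg adult needs 3.5 times as much chocolate as the 20 kg child for the same per-kg dose.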

A reasonable rule of thumb, if you have a medium-sized 30 kg dog like I do, is to ask yourself: “Would 10 times this amount give me a stomach-ache?” So, for example, if your dog wants to try a Jaffa cake: you have probably eaten ten of those in one sitting before and been fine, so go ahead. Ten jars of Nutella, though? Might reconsider. The math behind this is that dogs are 3 times as sensitive and a third of your weight, and 3 times 3 is 9, which is roughly 10.
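The "times 10" rule can be written as a one-line formula: if toxicity goes by dose per kilogram and a dog is some factor more sensitive per kilogram, then the human-equivalent portion is the dog's portion times the sensitivity times the ratio of the two body weights. A small sketch, where the sensitivity factor of 3 is this post's claim and the body weights are example values I picked:

```python
def equivalent_human_multiplier(sensitivity: float, human_kg: float, dog_kg: float) -> float:
    """How many times the dog's portion a human would have to eat to get the
    same effect, assuming toxicity scales with dose per kg of body weight and
    the dog is `sensitivity` times as sensitive per kg as a human."""
    return sensitivity * human_kg / dog_kg

# "A third of your weight": a 90 kg human and a 30 kg dog.
print(equivalent_human_multiplier(3, 90, 30))
# A 70 kg human and the same 30 kg dog.
print(equivalent_human_multiplier(3, 70, 30))
```

With a 90 kg person the factor is 9; with a 70 kg person it drops to 7, so rounding up to 10 errs on the cautious side for a 30 kg dog.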

Of course that being said, chocolate is toxic and you should avoid it yourself.

Finally, let me address my last pet peeve in this category: articles saying that fat and salt are bad for your dog. This is the ultimate double standard. Fat and salt are exactly as harmful to humans as they are to dogs.

Of course, if you have a small dog, you should keep him out of your potato chips. The same per-kg rule applies as above: one bag of potato chips might as well be 10 bags to a small dog, and, let’s be honest, 10 bags of potato chips eaten within a day would land you in the hospital just the same.

However, what those “is X bad for your dog” sites say, that your dog can’t have any fat at all because it’s bad for them, is plain wrong. The thing to consider with dogs and fat is that dogs, just like humans, have a limited amount of an emulsifier in their digestive system, called bile. A given amount of bile can only handle a given amount of fat. How much bile you have depends on how much fat you usually eat (the body prefers not to waste it), but you can never have enough bile for a bucket of lard, because there are limits on how large your gallbladder can get for your body size. Undigested fat passes through the digestive system intact, causing stinky, oily diarrhoea.

Most dogs get only a modest, fairly constant amount of fat in their food, because commercial dog food (wet or kibble) is the same day after day and, apart from water, is mostly carbohydrates and protein (and no, that jelly is not fat either: it is mostly water and gelling agents, sorry). If your dog has had nothing but dog food its entire life, giving it something fatty will very likely give it diarrhoea. However, if you regularly give your dog small amounts of fat (omega-3s! recommended for fur health!), it will get used to it and fatty treats will cause no harm… unless, of course, you give way too much.
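The adaptation argument above can be sketched as a toy model: each day the gut can handle fat up to some capacity, anything above it passes through undigested, and the capacity slowly drifts toward the usual intake, never below a baseline and never above a body-size ceiling. Everything here is a loudly hypothetical assumption: the units are arbitrary, and the baseline, ceiling and adaptation rate are made-up numbers chosen only to illustrate the reasoning, not canine physiology.

```python
# Toy model of the bile argument. All quantities are in arbitrary "fat units"
# and every constant is a hypothetical illustration, not physiological data.
BASELINE_CAPACITY = 1.0   # fat handled with no fatty food in the diet
MAX_CAPACITY = 10.0       # ceiling set by body size ("bucket of lard" limit)
ADAPT_RATE = 0.2          # fraction of the gap to today's intake closed per day

def simulate(daily_fat: list[float], capacity: float = BASELINE_CAPACITY) -> list[float]:
    """Return the undigested fat for each day of the given diet."""
    undigested = []
    for fat in daily_fat:
        # Anything above today's capacity passes through undigested.
        undigested.append(max(0.0, fat - capacity))
        # Capacity drifts toward the intake, clamped between baseline and ceiling.
        target = min(max(fat, BASELINE_CAPACITY), MAX_CAPACITY)
        capacity += ADAPT_RATE * (target - capacity)
    return undigested

# A dog on plain dog food suddenly gets a treat worth 2.5 units.
print("sudden treat:", simulate([2.5])[-1])
# The same treat after ten days of 3 units of fat per day.
print("after getting used to fat:", simulate([3.0] * 10 + [2.5])[-1])
# A "bucket of lard" overflows no matter how long the dog has adapted.
print("bucket of lard:", round(simulate([50.0] * 100)[-1], 2))
```

In this model the naive dog spills 1.5 units of the treat, the adapted dog spills none, and the daily bucket of lard still overflows by about 40 units because of the ceiling.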

Thank you for reading my rant. 🙂 For a reward, have my doggo’s puppy eyes. I promise, she’s a healthy, happy doggo, who gets lots of love and treats of all kinds.

Chocolate she doesn’t like very much because it’s bitter.

Best regards,

Jure

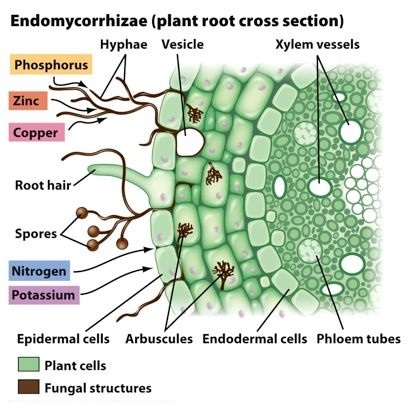

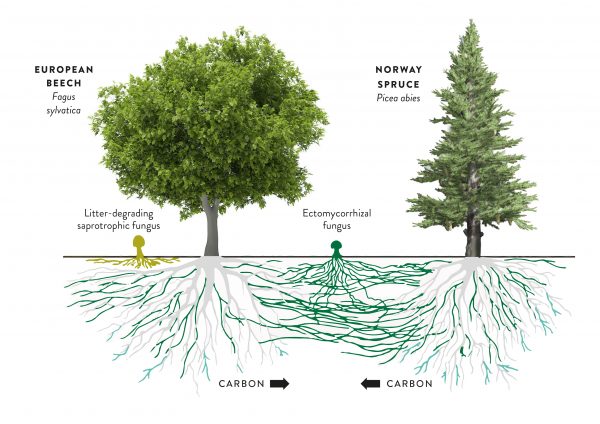

This makes fungi a lot better at certain tasks than both plants and animals. In the case of plants in particular, fungi are able to extract minerals and water from soils that are toxic to the plant, or where those resources are otherwise unavailable to it. The relationship between plant roots and fungi is very old, and both plants and mycorrhizal fungi have specialised organs that form the interface between the two species. The plant uses these organs to get nutrients and water from the fungus, and the fungus feeds off hexoses, simple six-carbon sugars that the plant supplies to it.

What I like to think (that is, there is some foundation for this in current research into mycorrhiza, but I am not a scientist) is that nature has all of these different systems, and what it ends up using is whatever works best in a particular scenario. If different fungi are competing for plants, and therefore only the most successful survive, then some of the time perfectly socialist networks will be the ones that prevail. And since we have observed examples of this, I think it’s safe to say this kind of system can work. The plants and the fungi are able to settle this on their own, without human interference, even in a system where abuse is quite possible and probably even advantageous in some cases. That is, there are both plants and fungi that take advantage of the network, simply because they can. But it seems that, in the grand scheme of things, networks without such individuals work better and out-compete networks that do contain exploitation.

There is a lot of mysticism online around artificial intelligence. For a lot of people it’s little more than a science-fiction-level fascination, and you can tell immediately from their persistent and senseless recycling of Asimov’s laws, which originate in 1940s science fiction and do not, and never will, apply to any real-world software program. I am not one of those people. I view AI from the perspective of a software developer, and there is no place in my understanding of AI for vague, made-up abstractions that have no translation into real-life machine code.

It relates to a phenomenon of spam comments on random websites across the Internet, seemingly about key lime pie (pictured), whose sentences eventually devolve into pornographic proportions of nonsense. The thing is, while a spambot may be to blame, it’s difficult to explain why it would be advertising pie of all things, and why it would keep this up for over a decade.
