Hi,

As you know (or maybe you don’t? Who knows how you got here), I made an operating system kernel from scratch a while ago. The date on the page is right: the thing was put together in 2008, but it wasn’t touched again after 2013. The reason is that I realized that in order to achieve frame rates higher than 16-ish FPS (which is what VideoBIOS enables), I would have to implement every graphics driver ever, for any card that ever existed, which did not seem like a worthwhile use of my time. 😛

I was explaining this to a coworker not long ago, which made the subject fresh in my mind. I remembered the event-driven architecture I had planned for this kernel… The idea was to let the kernel allocate requested system resources directly (such as clock intervals, screen regions, I/O messages, and so forth) and allow for an unbroken chain of events from the hardware directly into the individual apps. This would entirely avoid polling of any kind, which is commonly used in modern operating systems (and is inherently inefficient).
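
To make the idea a bit more concrete, here is a minimal sketch in Python. It is not code from the actual kernel: the `EventKernel` class, its `request` and `interrupt` methods, and the resource names are all made up for illustration. The point is only that an app registers for a resource once and is then called when the (simulated) hardware fires, with no loop anywhere asking "anything new yet?".

```python
from collections import defaultdict
from typing import Any, Callable

# Hypothetical type for an app's event handler.
Handler = Callable[[Any], None]


class EventKernel:
    """Toy stand-in for the kernel: hands out resources and routes events."""

    def __init__(self) -> None:
        # Resource name -> handlers of the apps that requested that resource.
        self._subscribers = defaultdict(list)

    def request(self, resource: str, handler: Handler) -> None:
        """An app asks for a resource and says where its events should go."""
        self._subscribers[resource].append(handler)

    def interrupt(self, resource: str, payload: Any) -> int:
        """Called when the simulated hardware fires; returns handlers run."""
        handlers = self._subscribers.get(resource, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


# Two pretend apps, each requesting one resource.
kernel = EventKernel()
log = []
kernel.request("clock:100ms", lambda tick: log.append(("clock app", tick)))
kernel.request("keyboard", lambda key: log.append(("editor", key)))

# Simulated hardware events go straight to the interested app.
kernel.interrupt("keyboard", "a")
kernel.interrupt("clock:100ms", 1)
```

Nothing runs between events: an app that requested the keyboard does no work at all until a key actually arrives.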

This morning I suppose my mind was still processing this idea, and I had a dream about multiprocessing. It came with visuals of hardware architecture and all. I think I saw an FM2 socket, so it seems my subconscious is fairly well-versed in technology. 😛

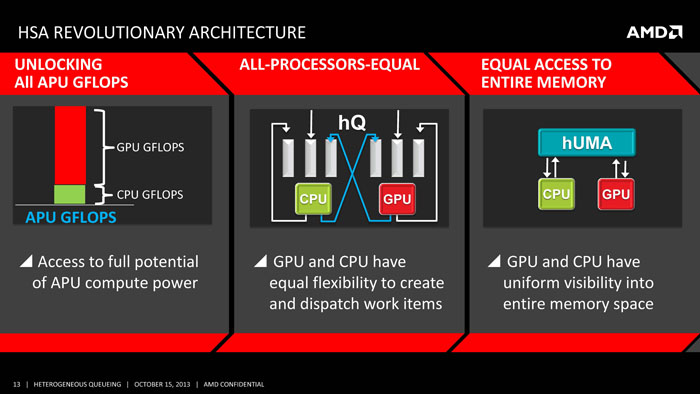

Jokes aside, I have always been fascinated by heterogeneous multiprocessing, that is, processing using multiple CPUs, where the CPUs differ from one another and do different things. It’s an idea AMD wrestled with quite a bit while trying to marry GPU architecture (which is mainly parallel) with CPU architecture (which is heavily serial), starting out with the Geode cores and eventually arriving at the modern Ryzen APUs. It was also an idea visited by the Cell line of processors, although I suspect that move was based more on cost saving than on performance hunting.

The natural assumption is that, since the different cores are good at different things, in tandem they should be better at a random workload than a homogeneous CPU, which does one thing well and others worse. In reality this is not the case. Much of the performance of modern machines comes from carefully timing the delays between the individual pieces of hardware, and the difference between a slow and a fast program is mainly in how well the program catches the rhythm of the underlying hardware. A heterogeneous high-performance system is always going to be fairly uncommon, and therefore no programs are optimized for it, yielding poor overall performance.

However, there are use cases, for example the management controller on a server. It is usually a not very powerful secondary CPU that runs an entirely separate system, which watches for failures in the main system and acts to correct them. The main advantage is that it is independent and therefore still able to act if the main system becomes inoperable.

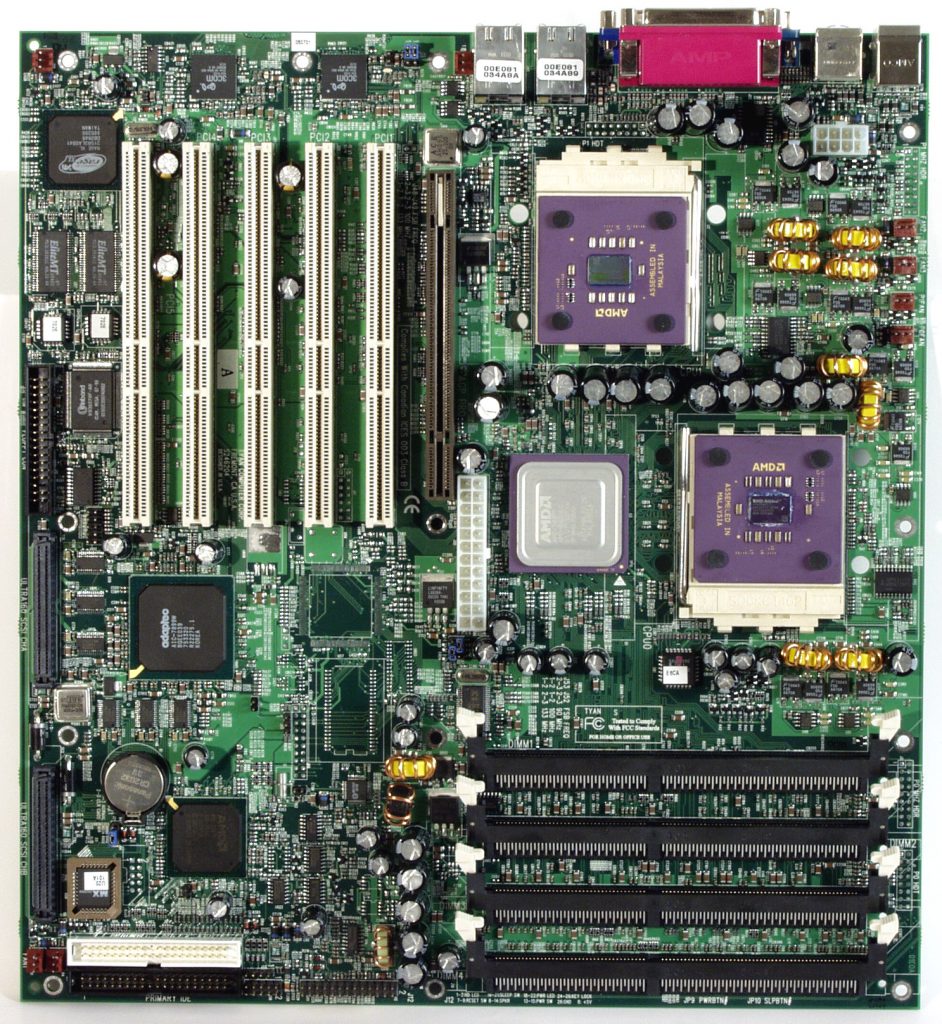

The role of a main CPU on a motherboard is of course fairly obviously separate from the other micro-controllers on a typical PC motherboard, even in cases where the CPU is on a standardized interconnect:

The idea suggested in my dream was that you could run one of the CPU cores (or a second CPU on the motherboard) with an entirely separate program from the main OS. In reality, this would work if the CPU worked with its own independent memory and didn’t have any conflict with, or need to share other hardware with, the other CPU. Which raises the question of the use case. 😛

As described before, while this would be pretty cool, it would obviously not offer a performance advantage, as modern computers are designed to have all CPUs share the same OS and have very similar roles, sometimes even a shared cache, which is in great conflict with my idea. There is also no advantage to having a supercharged management controller, as management tasks are just not that elaborate.

When a computer boots, it uses a single CPU. Soon after, however, the second CPU (or third, etc.) is given something to do by pointing it to a memory location and letting it do its thing. At that point in the process, the programmer has to be aware that the second CPU will be executing simultaneously with, and independently of, the main CPU’s boot thread, and therefore the problems involved in multi-threaded processing become relevant. You are basically booting multiple computers at the same time and sharing every running program between them.
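
As a rough user-space analogy (not real boot code, and not how any particular firmware does it), the situation can be sketched with Python threads. Each thread plays a secondary CPU that is "pointed at" an entry function, and the shared counter stands in for any state all CPUs can see. The names `secondary_entry`, `boot`, and the constants are hypothetical.

```python
import threading

NUM_SECONDARY_CPUS = 3      # hypothetical number of extra cores
INCREMENTS_PER_CPU = 10_000

shared_counter = 0                # state that every "CPU" can see
counter_lock = threading.Lock()   # protects shared_counter


def secondary_entry(cpu_id: int, started: threading.Barrier) -> None:
    """Entry point a secondary 'CPU' is pointed at by the boot CPU."""
    global shared_counter
    started.wait()  # from here on, all CPUs run at the same time
    for _ in range(INCREMENTS_PER_CPU):
        # Without the lock, the read-modify-write below could interleave
        # with another CPU's and updates could be lost.
        with counter_lock:
            shared_counter += 1


def boot() -> int:
    """The boot 'CPU' starts alone, then wakes the others."""
    global shared_counter
    shared_counter = 0
    # One barrier slot per secondary CPU, plus one for the boot CPU.
    started = threading.Barrier(NUM_SECONDARY_CPUS + 1)
    cpus = [
        threading.Thread(target=secondary_entry, args=(cpu_id, started))
        for cpu_id in range(1, NUM_SECONDARY_CPUS + 1)
    ]
    for cpu in cpus:
        cpu.start()
    started.wait()  # the boot CPU releases everyone at once
    for cpu in cpus:
        cpu.join()
    return shared_counter


total = boot()
```

The lock is the whole point of the sketch: from the moment the secondary CPUs are released, every piece of shared state needs this kind of care.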

The performance of the system at this point depends on how well the operating system orchestrates all the components and their workloads, so that on the one hand it acts reliably, and on the other, the timings are not off and every part has something to do without waiting its turn. This is why most supercomputers are designed for a specific type of task, despite having petaflops of processing power to work with.

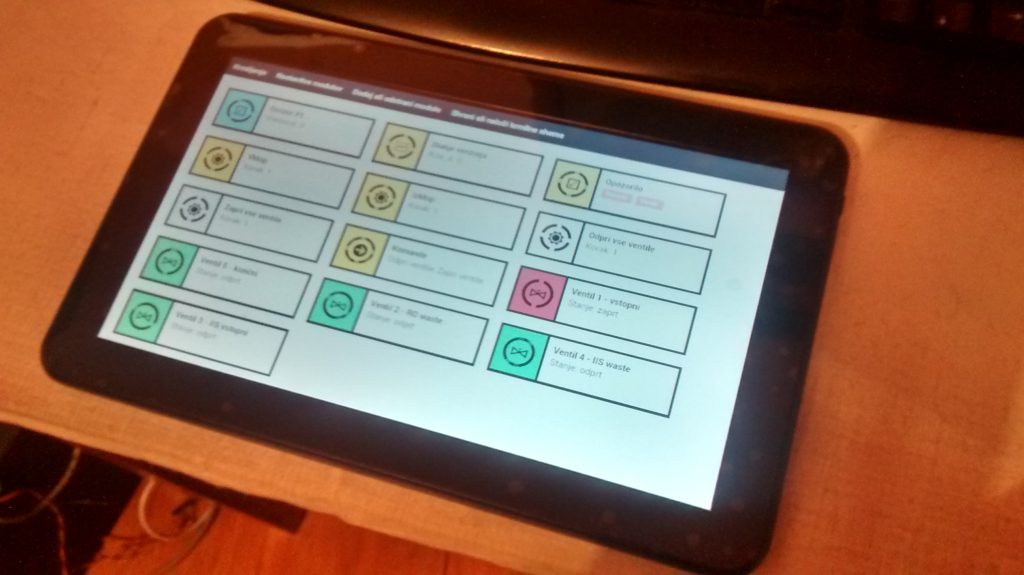

I think as far as my idea goes, trying to figure out how to make it work with hardware is ultimately pointless (at least as long as I don’t have a chip fab at my disposal). When I first realized I would have to code drivers, my solution was that I wouldn’t use PC hardware, but rather something like a tablet, where all units would share the same hardware. I think my event-driven concept has value, but if I implemented it today, I would probably build on something like Linux instead of coding the whole thing myself. Yes, it wouldn’t be as efficient, but it would work and it would be a finite effort.

I think the advantage of such a system lies not so much in raw performance as in usability. What my hardware-shared and event-driven system offered over modern operating systems is that one could stop thinking in terms of what a computer has attached and instead think of the resources of an entire local network collectively (as events could always be transmitted over a network). Use a system with processing power for its processing resources, and use a portable system with a broad user interface (a tablet) for its user interface resources. Migrate resources between compatible devices seamlessly.
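
A minimal sketch of what "events over a network" could look like, assuming events are encoded as one JSON object per line. The wire format and the function names `encode_event`, `decode_event`, and `forward_event` are my assumptions for illustration, not something the kernel ever implemented. A local socket pair stands in for the network link between a tablet and a more powerful machine.

```python
import json
import socket


def encode_event(resource: str, payload) -> bytes:
    """Serialize one event as a single newline-terminated JSON line."""
    message = {"resource": resource, "payload": payload}
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_event(line: bytes):
    """Turn one received line back into (resource, payload)."""
    message = json.loads(line.decode("utf-8"))
    return message["resource"], message["payload"]


def forward_event(sock: socket.socket, resource: str, payload) -> None:
    """Send an event to whichever device currently owns the resource."""
    sock.sendall(encode_event(resource, payload))


# A connected socket pair stands in for tablet <-> server over the LAN.
tablet, server = socket.socketpair()
reader = server.makefile("rb")  # line-based reads keep events separate

# The tablet provides the touch screen; the server does the processing.
forward_event(tablet, "touch", {"x": 10, "y": 20})
forward_event(tablet, "touch", {"x": 11, "y": 22})

first = decode_event(reader.readline())
second = decode_event(reader.readline())

reader.close()
tablet.close()
server.close()
```

On the receiving side, a decoded event could be handed to the same kind of local dispatch as any hardware event, which is what would make the device boundary mostly invisible to the app.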

When I first thought of tablets for my kernel in 2008, mobile devices were not yet in widespread use. In the 10 years since, this has changed. Perhaps the above paragraph will also successfully predict what in technology will change over the next 10 years. Will the cloud become local? We will see.

Best regards,

Jure